Several readers have told me my blog renders very oddly at times, and that my passing appreciation of the elephant has become a front-page story. I'll look into this this evening. In the meantime, if you're not reading blogs using an RSS reader, get with the programme!

[UPDATE 2006-04-26 Hurrah! Problem seems to be fixed. It was an unfortunate combination of a possible Blogger bug and a definite Internet Explorer weirdness.]

Wednesday, April 26, 2006

Tuesday, April 25, 2006

Jonathan gets the top job

So, Scott has decided to move on.

Like I said last year, I still don't know what Sun are for but it's nice to know that a geek can get to the top. Congratulations, Jonathan!

Monday, April 24, 2006

WSDL-first development

Jeff Schneider talks about the role of contracts and requirements in a service-oriented world.

I'm working on a project at the moment where we're facing very similar questions.

On the interface specification front, we're doing something like what he suggests: where an interface doesn't already exist (i.e. where we need to work in top-down mode), we take the use-cases and UML sequence diagrams as input and identify the interface a service needs to expose.

We then generate a WSDL file. In our case, we use a nice property of WebSphere Integration Developer to speed us up: a WebSphere Process Server interface is a WSDL file so our product-specific tooling generates a globally-usable WSDL file automatically. This neatly gets round the problem of "do we do the WSDL first or the implementation-specific interface first?"

The second question he addresses is a little deeper: how do we deal with the "analysis impedance mismatch" between the "old" world of function-specific use-cases and the "new" world where we need to think in terms of reusable distributed services?

Again, we're doing something similar to his colleague: we have a document that identifies what operations each service must expose if we are to be able to meet the stated requirements. However, we add an extra step. We use WebSphere Business Modeler to allow the business analysts to draw end-to-end flows. For example, a particular business process flow would show how we provision a service all the way from the user logon to a portal to the invocation of the back-end systems.

The analysts use the tool in "basic" mode, where operations and data types are hidden. However, this model is then passed directly to the service composers - who switch to an "advanced" mode - where data types, interfaces and operations and conditions are shown. By analysing the flow, and questioning how to get from one step to the next, requirements on interfaces that wouldn't otherwise be obvious can be deduced. It is the use of this extra tool that allows us to simplify the handover from analysis to design.

Of course, if this was *all* we used the Modeler for then it would probably be overkill. Fortunately, this assistance in identifying interfaces drops out of its normal usage.

People over Process

Bill Higgins talks about the welcome introduction of Coté to Redmonk.

Bill talks about the role of process in software development. I remember when I first joined IBM and was placed into a team that wrote the stress tests for our Message Broker product. I was fresh out of university where I had just won a distinction thanks, in part, to my programming project - which I had written in the archetypal single-coder, late-night, coffee and pizza style.

The culture shock was intense, to put it mildly.

Looking back on it, I realise I didn't have the maturity to see that managing a 100+ man development and test team, distributed over multiple continents and timezones, is different to writing some code in your bedroom. The development process was there for a reason - and it worked. The thing that frustrated me most - the apparently endless meetings and time taken to take a change from concept into product - wasn't slowed down by the process, but was enabled by it. Sure - things could have been done more quickly if the process hadn't been there but, six months later, there would have been utter chaos and we'd have never got anything out of the door.

I reflected on this as I read Bill's post. He argues that endless, mindless process is an enemy. Quite right. The only thing I'd add is that, in order to classify a process as mindless and choose to throw it out, you don't just need good people; you need experienced people.

Spooky maths problems

Cool post on the mainframe blog taking a potshot at non-mainframe hardware.

As someone who doesn't really have much exposure to mainframes, I found this post fascinating for a couple of reasons.

Firstly, I didn't know about that feature - and it's always nice to learn new things.

Secondly, and more importantly, this was a perfect example of taking a geeky train-spotter technical observation and making it relevant to the business. Here's something that no other platform has, and which I'm sure doesn't appear in anybody's ROI or TCO or performance calculations, but which could be utterly crucial in certain applications.

I'm sure I'm not alone in having assumed that all hardware would be equally vulnerable to cosmic ray- or heat-induced mistakes. I didn't realise until now that there was a platform that explicitly protected you from them. Nice.

Sunday, April 23, 2006

How to moderate a panel discussion

I'm hosting a discussion on the topic of "Project Failure" at an IBM conference next week and have the tricky task of keeping a room of IBM WebSphere consultants under control.

I'm scheduling a call with a colleague whose opinions I respect in these matters and am perusing the web for ideas but thought I'd throw the topic open to my wise and generous readers.

Have any of you picked up good tips for successfully moderating panel discussions?

Comments, as always, are open.

Am I missing something?

I just read this useful article on Dennis Howlett's blog. It's about using RSS as an integration platform.

I haven't had time to follow all the links yet but it sounds like the idea is to use an RSS feed to "push" events from one application to another.

e.g. in the typical "Customer Sync" EAI scenario, rather than writing an event to an event table or publishing an event to a bus or writing a message to a queue, the "new customer" appears in a particular RSS feed instead. All interested applications subscribe to this feed and, hey presto, we're done!

The idea has many merits - RSS (superficially at least) is a simple way to do pub/sub and neither publisher nor subscriber would need to invest in any expensive middleware.

The areas that confuse me - and perhaps would be answered by following the links - are things like:

* My RSS feeds routinely miss items (e.g. if it's been a while since I last polled). I hope that wasn't your future best customer you forgot to sync to the billing system!

* My RSS feeds routinely double-up items. Better hope you didn't debit that guy's account twice!

* Unless I'm missing something, RSS isn't really "push" pub/sub. Rather: it relies on frequent polling by clients. That must place severe loads on the publishing servers.
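A minimal sketch of the usual answer to the doubled-up items problem, assuming each feed item carries a stable `<guid>` (RSS 2.0 allows one but does not require it). The feed content, the `new_items` helper and the in-memory `seen` set are all made up for illustration; a real subscriber would need to persist the set of processed identifiers. Note that this only makes the consumer tolerant of repeats. It does nothing for items that rolled off the feed before the subscriber polled.

```python
import xml.etree.ElementTree as ET


def new_items(feed_xml, seen_guids):
    """Return the items in an RSS 2.0 document whose <guid> is not yet in seen_guids."""
    root = ET.fromstring(feed_xml)
    fresh = []
    for item in root.iter("item"):
        guid = item.findtext("guid")
        if guid is None or guid in seen_guids:
            continue  # skip repeats, and items we have no way to identify
        seen_guids.add(guid)
        fresh.append({"guid": guid, "title": item.findtext("title", default="")})
    return fresh


# Hypothetical "new customer" feed, not taken from any real system.
FEED = """<rss version="2.0"><channel><title>customers</title>
<item><guid>cust-1</guid><title>New customer: Alice</title></item>
<item><guid>cust-2</guid><title>New customer: Bob</title></item>
</channel></rss>"""

seen = set()
first_poll = new_items(FEED, seen)   # both items are new
second_poll = new_items(FEED, seen)  # same feed polled again: nothing new
```

Once the subscriber has to keep a durable record of what it has already processed, it is doing part of the job a messaging layer would otherwise do for it.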

I can't help thinking that the solution to these problems (and others) would lead us to re-discovering asynchronous messaging, a middleware tier and all the stuff we already have.

However, I'm prepared to suspend any cynicism and investigate a little further....

Saturday, April 22, 2006

Are WS-* specs too top down?

David Ing has a good post which essentially says that read-only operations are so common and so important that it is a good idea to treat them as a special case, which is what he claims GET does in the REST world.

It's a fair point but it seems to assume that the whole swathe of WS-* specs are necessary in all cases. If all you're doing is a straight query, you don't need WS-AtomicTransaction, WS-ReliableMessaging, WS-Notification or pretty much any of the other stuff. You just need to define what your request looks like, what you expect to get back (most often as a couple of XSDs) and describe how to ask the question. REST seems to do this by providing a URL which encodes the full identity of the resource being queried; WS-* does this by specifying the location of the server in one place and uses the concept of an 'operation' to specify the "question". There's not a great deal of difference at this level that I can see.
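To make the comparison concrete, here is a rough sketch of the same read-only query expressed both ways. Everything specific in it is an assumption for illustration: the `example.com` addresses, the `/customers/` path, the `getCustomer` operation and its namespace are invented, and only the SOAP 1.1 envelope namespace is real.

```python
from urllib.parse import quote
from xml.sax.saxutils import escape


def rest_query_url(base, customer_id):
    """REST style: the URL itself carries the full identity of the resource."""
    return f"{base}/customers/{quote(customer_id, safe='')}"


def soap_query(endpoint, customer_id):
    """WS-* style: one fixed endpoint, with the 'question' named by an operation."""
    body = (
        '<soap:Envelope xmlns:soap="http://schemas.xmlsoap.org/soap/envelope/">'
        "<soap:Body>"
        '<getCustomer xmlns="http://example.com/customers">'  # hypothetical operation
        f"<customerId>{escape(customer_id)}</customerId>"
        "</getCustomer>"
        "</soap:Body>"
        "</soap:Envelope>"
    )
    return endpoint, body


url = rest_query_url("http://example.com", "42")
endpoint, envelope = soap_query("http://example.com/services/CustomerService", "42")
```

In both cases the caller supplies a location and an identifier. The difference is where the identifier lives, which is roughly the point being made above.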

The biggest difference I see when perusing documentation is that the WS-* guys give you a WSDL and some XSDs and expect you to figure it out (or feed it to your tools). The REST guys give a nice example of what the XML flowing over the wire should look like. Sometimes that's *all* they give, which just scares me, but that's not a criticism of the entire approach - and the example that is often provided is fantastic.... it lowers the barrier to user experimentation significantly.

Where I think WS-* shows its value is when somebody then asks you to update the data you've just received or if you need to do it securely, for example. WS-* provides a way to call operations with side effects - and to do it in a security context, or transaction context, or whatever - using precisely the same model as the simple query. It's not that the "query" special case is hidden away inside this spaghetti of specs. Rather, the query is the simplest case and everything else builds on it where necessary.

It strikes me that one of the biggest weaknesses of WS-* is in PR: people seem to believe you need to understand the whole lot in order to achieve anything. Not true. I've certainly never read the WS-Notification spec or many of the others (I probably shouldn't admit that in my line of work but still...)

Friday, April 21, 2006

How many system calls to serve a single webpage?

Groovy images on a zdnet blog that purports to demonstrate that Windows is "inherently" harder to secure than Linux. That may or may not be true but I don't think the diagrams show what the author thinks they do. It is entirely possible they just show greater modularity in Windows.

Either way, the pictures are interesting and it could be a useful way to reason about such things if applied carefully.

[EDIT 2006-04-22. Corrected typo. Thanks for spotting it, Dave]

"How you treat a waiter can predict a lot about character"

"Watch out for people who have a situational value system, who can turn the charm on and off depending on the status of the person they are interacting with." How true. Great article in USA Today.

Thursday, April 20, 2006

Links

Another WordPress convertee; I'm waiting until Andy regains his Google ranking...

Paul Thurrott has looked at Windows Vista and doesn't like what he sees. The most bizarre part of his article was where he mentions Windows XP Task Panels. I have absolutely no idea what one of these is. Anyone know?

Will Henry's readers get it right two quarters in a row?

What a surprise... a security system that does everything except secure its target

My friend Ben has been battling with the manufacturer of his car for some weeks now. He took it into a garage for a routine service and, whilst checking out a fault he had reported, they disconnected the battery. This reset his radio, which meant he had to enter a security code before he could use it again.

The only problem was: he doesn't have the security code and the garage that sold the car to him has gone out of business.

The new garage wanted to charge him a lot of money to "obtain" the code from the third party that is the custodian of the security numbers.

Being the geek that he is, he wrote a script to generate every possible combination and printed them out. He drove around with this list in the car and began to try each one in turn. He calculated that this would take him four years, since there is an enforced one-hour lockout between each bad attempt.
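The arithmetic behind an estimate like that is easy to sketch. The post doesn't say what format the radio code takes, so the 10,000-code figure below (four decimal digits) is purely illustrative, and the calculation assumes one attempt per lockout period, round the clock, in the worst case where the right code is the last one tried.

```python
HOURS_PER_YEAR = 24 * 365


def years_to_try_all(code_count, lockout_hours=1):
    """Worst-case years needed to try every code with a lockout after each attempt."""
    return code_count * lockout_hours / HOURS_PER_YEAR


def codes_in_years(years, lockout_hours=1):
    """How many attempts fit into the given number of years."""
    return years * HOURS_PER_YEAR // lockout_hours


four_digit_years = years_to_try_all(10_000)  # illustrative code space only
four_year_budget = codes_in_years(4)         # attempts possible in four years
```

Under those assumptions, four years of hourly attempts covers 35,040 codes, and the expected time to hit the right one is about half the worst case.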

I nonchalantly asked at the weekend if there were any "dodgy sites on the internet" that he could go to - in the same way that one can unlock a cellphone that has been blocked from accessing any network other than the one that originally provided it.

Eventually, Ben downloaded a piece of free software and tried it out. He entered the radio's serial number and it printed out a code immediately. It worked. His radio is unlocked!

I suppose I shouldn't be surprised but it does make one wonder why purchasers consider such systems to be a selling point. They are nice revenue generators for the third-parties and do nothing to thwart a professional thief. All they do is inconvenience the person they are sold as helping. Bizarre....

Arghhhh! Pet Store in AJAX

Bill Higgins and James Governor point to an interesting discussion about the impact of AJAX on server load.

I'm not really sure where to start on this one so I'll do it in the form of a bulleted list. I like bulleted lists.

- "Server load" needs to be defined. Session size? Number of requests to service an end-to-end flow? Total amount of data transferred? CPU cycles/MIPS per user session? Database queries per session? etc, etc, etc

- AJAX's use of client-side state would suggest that there is the potential for smaller session size and total data transferred but a higher number of requests of the server

- Experimentation and experience will tell

- Pet Store in AJAX? Bill, you are a bad man.

- Most of the debate is academic. AJAX is so clearly the way to go that the only question that matters is: how do we accurately size and scale deployments?

Wednesday, April 19, 2006

Unintended consequences of transparency

There are multiple websites in the UK offering house-prices sales data for free. You can type in the name of a road - or provide a postcode - and details of every property sale in the last five years are presented.

This is a great service for purchasers and sellers alike.

It does, however, present several problems. The most obvious is that it's no longer possible to brag dishonestly to friends. A seller cannot claim they achieved a better price than they did and a buyer cannot claim they got the bargain of the century. Estate agents probably find such websites a pain since information they previously had exclusive access to is now available more widely.

It occurred to me last night, however, that the presence of such websites provides an unusually high incentive to overstate the price at which a property sold - and that this could make the data less useful than it first appears.

Imagine a vendor and purchaser have agreed a sale at £275k. Were they to structure the deal as a £280k purchase price, with a £5k cashback from the vendor to the purchaser, some interesting things emerge:

* The vendor gets to brag about getting a higher sale price

* The estate agent gets to brag about rising house prices and their sales prowess

* The mortgage company can report higher selling prices

* The purchaser has a higher baseline entered for their property into the online databases, which will help them when they come to sell

* The government makes more stamp duty revenue (3% of £5k = £150)

It is rare indeed to find such a convergence of interests.

Now, there would be losers: the purchaser would lose £150 and some bragging rights and future purchasers could be considered to have been misled over the real price. The first could be considered a trade worth making in exchange for the benefits it provides (and the cost could easily be split with the other parties who stand to gain). The second is a clear externality - and, as such, wouldn't change the equation for those participating directly in the trade.
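The £150 figure can be checked with a couple of lines. This sketch assumes, as the figure in the post implies, that a single 3% rate applies to the whole of both prices. It ignores thresholds and bands, and `stamp_duty` is just an illustrative helper.

```python
def stamp_duty(price_pounds, rate_percent=3):
    """Duty in whole pounds, assuming one flat rate on the full price."""
    return price_pounds * rate_percent // 100


agreed_price = 275_000    # what the parties actually agreed
declared_price = 280_000  # what gets recorded, before the £5k cashback

extra_duty = stamp_duty(declared_price) - stamp_duty(agreed_price)
```

The extra duty is the purchaser's only direct cost, which is why it is small enough to be shared out among the parties who gain.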

So, my question is: is such a thing legal? I'm struggling to articulate a clear reason why it wouldn't be.

Assuming it is legal, is it really realistic to assume somebody else hasn't already spotted this? If not, presumably it is happening all the time.

My supplementary question, therefore, is: how would one detect such behaviour and is there any way to deduce the true selling price for a property from the publicly available information?

Tuesday, April 18, 2006

What does 'reliable' mean?

Bobby Woolf discusses WS-ReliableMessaging here. His post reminded me of a conversation I had with a colleague a couple of weeks ago. He was specifying some standards to be used on a large project and wanted to turn his intuitive understanding of the word "reliable" into a prescription for what technology to use.

The scenario was a synchronous request/response interaction. SOAP/HTTP isn't reliable. The request might not get there. The request might get there but the response might get lost in transit (e.g. one or other party crashes at just the wrong time). Maybe the response would get back if it were allowed to but you have timed out. In short, how do you know whether your request has been actioned and whether it has been actioned successfully?

An obvious answer to this is to use asynchronous messaging: put the request on a queue, trust the messaging infrastructure to deliver it (or tell you that it can't). Wait for the response. If you or the recipient die at any point or if the network goes down at an inopportune moment, who cares? The messaging layer will take care of it all for you.

The colleague's problem was that he needed to be vendor-agnostic and "internet-friendly". JMS wasn't on the cards and WS-RM doesn't cut it yet. So, what can we do?

The answer in this case was quite nice: what we want in this situation isn't really "reliability"... it's "certainty". We just want to know if it worked or not. The correct tool in our kit bag for this problem isn't asynchronous messaging. It's transactionality.

Consider the problem again:

There's an operation we want to perform. We really need to know if it happened or not (we can't risk it happening twice or not at all). Unfortunately, we have to assume that the communication could fail at any point and that the application at either end could crash at any time.

The problem with the non-transactional, non-queued request-response pattern is that, as soon as we make the request, we have to pray. The operation may happen or it may not. We may get to find out or we may not.

Now consider the transactional case: we make the request... but we're implicitly saying: "try to do this but don't make it permanent yet." If we get a response then everything's great, we can go ahead and ask the transaction coordinator to make it permanent ("commit") - or just forget about it if there was a problem. But now consider what happens if something goes wrong after we've made the initial request. We don't know if the operation was successful or not. But we no longer care.... we know it hasn't happened because we never committed it. When everything comes back up, the transaction coordinator will take care of tidying up all the junk that's lying around and we can just go ahead and try again.
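The "try it, but don't make it permanent yet" idea can be sketched as a toy, in-process example. This is not WS-AtomicTransaction or any real coordinator's API: the `Account` class, the `debit_once` helper and the simulated `LostResponse` failure are all hypothetical, and a real coordinator would rely on durable logs and a two-phase commit protocol rather than a Python dictionary.

```python
class Account:
    """Toy participant: a debit is held as pending until it is committed."""

    def __init__(self, balance):
        self.balance = balance
        self.pending = {}  # transaction id -> tentative debit amount

    def debit(self, tx_id, amount):
        self.pending[tx_id] = amount  # tentative only, balance untouched

    def commit(self, tx_id):
        self.balance -= self.pending.pop(tx_id)  # make it permanent

    def rollback(self, tx_id):
        self.pending.pop(tx_id, None)  # forget the tentative work


class LostResponse(Exception):
    """Simulates the response going missing after the request was sent."""


def debit_once(account, tx_id, amount, response_lost=False):
    """Return True if the debit was committed, False if it was rolled back."""
    account.debit(tx_id, amount)
    try:
        if response_lost:
            raise LostResponse(tx_id)
    except LostResponse:
        account.rollback(tx_id)  # stands in for the coordinator's tidy-up
        return False
    account.commit(tx_id)
    return True


acct = Account(100)
first = debit_once(acct, "tx-1", 30, response_lost=True)  # fails, nothing applied
balance_after_failure = acct.balance
second = debit_once(acct, "tx-2", 30)  # safe to simply try again
```

The caller never has to work out whether the first attempt "really" happened: it was never committed, so retrying cannot debit the account twice.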

It wasn't WS-ReliableMessaging he wanted; it was WS-AtomicTransaction.

Maybe the old-timers (if it's safe to call Danny Sabbah an old timer...) are right.... there really is nothing new in IT.

The scenario was a synchronous request/response interaction. SOAP/HTTP isn't reliable. The request might not get there. The request might get there but the response might get lost in transit (e.g. one or other party crashes at just the wrong time). Maybe the response would get back if it were allowed to but you have timed out. In short, how do you know whether your request has been actioned and whether is has been actioned successfully?

An obvious answer to this is to use asynchronous messaging: put the request on a queue, trust the messaging infrastructure to deliver it (or tell you that it can't). Wait for the response. If you or the recipient die at any point or if the network goes down at an inopportune moment, who cares? The messaging layer will take care of it all for you.

The colleague's problem was that he needed to be vendor-agnostic and "internet-friendly". JMS wasn't on the cards and WS-RM doesn't cut it yet. So, what can we do?

The answer in this case was quite nice: what we want in this situation isn't really "reliability"... it's "certainty". We just want to know if it worked or not. The correct tool in our kit bag for this problem isn't asynchronous messaging. It's transactionality.

Consider the problem again:

There's an operation we want to perform. We really need to know if it happened or not (we can't risk it happening twice or not at all). Unfortunately, we have to assume that the communication could fail at any point and that the application at either end could crash at any time.

The problem with the non-transactional, non-queued request-response pattern is that, as soon as we make the request, we have to pray. The operation may happen or it may not. We may get to find out or we may not.

Now consider the transactional case: we make the request... but we're implicitly saying: "try to do this but don't make it permanent yet." If we get a response then everything's great, we can go ahead and ask the transaction coordinator to make it permanent ("commit") - or just forget about it if there was a problem. But now consider what happens if something goes wrong after we've made the initial request. We don't know if the operation was successful or not. But we no longer care.... we know it hasn't happened because we never committed it. When everything comes back up, the transaction coordinator will take care of tidying up all the junk that's lying around and we can just go ahead and try again.
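To make that concrete, here's a toy sketch in Python. It is not WS-AtomicTransaction or any real coordinator: `Account`, `flaky_call` and `credit_with_certainty` are names I've made up for illustration, and the "network failure" is simulated with a flag. It also assumes the commit call itself always gets through, which a real two-phase commit handles with a prepare phase and durable logs. The point is only to show why a lost response stops mattering once nothing is permanent until commit.

```python
class Account:
    """Hypothetical service: changes are staged until they are committed."""

    def __init__(self):
        self.balance = 0
        self._pending = {}  # txn_id -> staged amount, not yet permanent

    def credit(self, txn_id, amount):
        # "Try to do this but don't make it permanent yet."
        self._pending[txn_id] = amount
        return "ok"

    def commit(self, txn_id):
        self.balance += self._pending.pop(txn_id)

    def rollback(self, txn_id):
        # Tidy up staged work; harmless if nothing was staged.
        self._pending.pop(txn_id, None)


class LostResponse(Exception):
    """Simulates a response that never reaches the caller."""


def flaky_call(service, txn_id, amount, lose_response):
    result = service.credit(txn_id, amount)  # the request arrives and is processed
    if lose_response:
        raise LostResponse("response lost in transit")
    return result


def credit_with_certainty(service, amount, failures):
    """Retry until a response arrives; only then commit.

    `failures` holds one boolean per attempt (True = lose the response).
    The rollback here stands in for the coordinator's recovery step.
    """
    for attempt, lose in enumerate(failures):
        txn_id = f"txn-{attempt}"
        try:
            flaky_call(service, txn_id, amount, lose)
        except LostResponse:
            service.rollback(txn_id)  # never committed, so it never happened
            continue
        service.commit(txn_id)
        return attempt + 1
    raise RuntimeError("gave up after repeated failures")


account = Account()
attempts = credit_with_certainty(account, 100, [True, True, False])
print(attempts, account.balance)
```

Two responses are lost, the request is processed three times at the far end, and yet the credit is applied exactly once because only the third attempt is ever committed.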

It wasn't WS-ReliableMessaging he wanted; it was WS-AtomicTransaction.

Maybe the old-timers (if it's safe to call Danny Sabbah an old-timer...) are right.... there really is nothing new in IT.

Failure

I'm moderating a roundtable at an internal conference in a few weeks around the subject of failure - I want to stimulate a debate about why some projects may fail to deliver on their initial promise and what we can do about it. Of course, there is a whole heap of analysis of this stuff and process to stop it happening already but I figured it would be fun to get a group of techies into a room and kick the issue around for an hour :-)

In the course of my preparation this afternoon, I noticed that David Ing is presenting on something similar (although focussed on architecture) at Microsoft's TechEd this year. There's obviously something in the water...

Don't worry, Raymond, it's not just you...

Raymond Chen is not alone. The verbification of perfectly harmless nouns is alive and well in IBM, too.

Two different IT industry buzzwords coined in the course of twenty minutes

"Test Driven SOA"..... "Batch Driven SOA"..... not bad going, guys.

RedMonk's sixth PodCast is well worth a listen.

- The increasing importance of sharing links to good content - and adding a short description. Can't disagree with that.

- A good discussion of "GreenHat" Consulting's Test Driven SOA concept. They seem rather Tibco-centric but I won't hold it against them...

- "SOA is the new spelling of CORBA". If you consider SOA only as a technology then I guess you could attempt to make this argument. I think there's an increasing acceptance, however, that SOA is something that the business should be interested in.

- "Connectors and agents bad". I'm not so sure... I think they still have their place. For example, there is a particularly sweet spot for connectors when you need to detect events in an application that doesn't know anything about asynchronous messaging or how to make calls out to interested parties. I haven't seen a good way to do this other than with locally-deployed polling connectors. It offends one's sensibilities but it's very often the least worst option.

- "24/7 running for SOAs", "Batch driven SOA". These are interesting thoughts. At the technical level, one of the goals of an SOA is to hide details of implementation. A potential problem then arises if you can't guarantee that the service implementing the functionality is up all the time... your abstraction has leaked before you've started. There are well-tested patterns for this sort of stuff (asynchronous interactions, the use of an ESB to intermediate, etc) but it comes down to a problem of service meta-data (or whatever you choose to call it): as well as documenting an interface, you need to publish its availability, performance characteristics, transaction support, etc, etc - and that should also form part of the contract.
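On that last point, here's a rough idea of what "meta-data as part of the contract" could look like. This is purely illustrative: `ServiceContract`, its fields and `choose_interaction` are hypothetical names of mine, not any registry's or product's format, and the WSDL file name is made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServiceContract:
    """Hypothetical published contract: the interface plus its operational promises."""

    interface: str            # e.g. where the WSDL lives (made-up value below)
    available_from: int       # first hour of the day the service is up (24h clock)
    available_until: int      # hour at which it goes down again (exclusive)
    max_response_ms: int      # advertised worst-case response time
    transactional: bool       # whether calls can take part in a transaction

    def is_available(self, hour: int) -> bool:
        return self.available_from <= hour < self.available_until


def choose_interaction(contract: ServiceContract, hour: int) -> str:
    """Call synchronously when the service promises to be up; otherwise queue."""
    return "synchronous" if contract.is_available(hour) else "queued"


# A batch-bound back end that is only online between 06:00 and 22:00.
billing = ServiceContract(
    interface="billing.wsdl",
    available_from=6,
    available_until=22,
    max_response_ms=2000,
    transactional=True,
)

print(choose_interaction(billing, 10))
print(choose_interaction(billing, 23))
```

A consumer (or an ESB sitting in the middle) that can read this sort of thing doesn't need to know why the service is down overnight, only that it should queue rather than call.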

All in all, an interesting discussion.

Sunday, April 16, 2006

Security matters

Eric Marvets links to his presentation on Microsoft's Security Development Lifecycle. It's easy to be cynical about Microsoft's activities in this area (and I certainly was when they first began their Trustworthy Computing initiative) but I prefer to assume they're serious these days. The bad guys are certainly paying attention: their growth strategy is to look elsewhere for the low-hanging fruit (as Oracle are already finding to their cost).

A lot of my clients deploy onto Windows (sometimes using Oracle, for that matter) so I'm happy to see so much focus in this area.

It's not just me that thinks inflation is far higher than the published figures

I was discussing inflation with a couple of colleagues last week... our experiences of rising prices seemed to be completely at odds with the official figures. I first noticed this in November last year when my dry cleaning bill shot up and one of the items I discussed last week was the perceived increase in cost of various items at Top Man (e.g. semi-disposable sunglasses up £2 since last year, etc).

It seems we weren't the only ones to notice. David Smith at the Times has spotted something similar.

His take is that the inflation figure is completely misleading as the massive price deflation for goods like cameras and computers is offsetting the very real rise in prices for services. I think he's probably right. However, that doesn't explain my, admittedly trivial, sunglasses observation. Perhaps it's an effect of the increase in prices for Chinese goods that our rulers so selflessly negotiated for us last year?

Thursday, April 13, 2006

David Chappell on SCA

David Chappell makes some good points about the Service Component Architecture (this link doesn't work in Firefox for me for some reason.... it's OK in IE though).

I see SCA as providing the technical underpinnings of an SOA-enabled runtime. I still mean to update my description of it. Until I do, here's my old one.

There's nothing wrong with 31 bits...

PolarLake's Warren Buckley says that IBM released 31 new SOA products and wonders what the unwritten 32nd was.

My story is that the 31 is a homage to System/370 and I'm sticking to it :-p

Incidentally, one of the 31 was a new version of WebSphere Portal, with support for Ajax.... cool!

Sunday, April 09, 2006

Things that cannot be ignored

Ian Hughes over at eight bar makes some good observations about online gaming. (He also has a followup post here)

I linked to a fascinating podcast about online gaming the other week in a posting that was really intended to welcome RedMonk to the world of PodCasting (I'm never sure.... should I spell Coté's name with an acute accent on the 'e' or with a trailing apostrophe? Love the clips, guys but James..... you've got to let Coté get a word in edgeways.) Anyway, I still recommend readers listen to the RadioEconomics clip.... the interviewee makes some insightful points.

This emerging, and interesting, area of computing unsettles me... I just hate the thought that all those friends of mine who spend hours gaming are actually doing something valuable.

Thursday, April 06, 2006

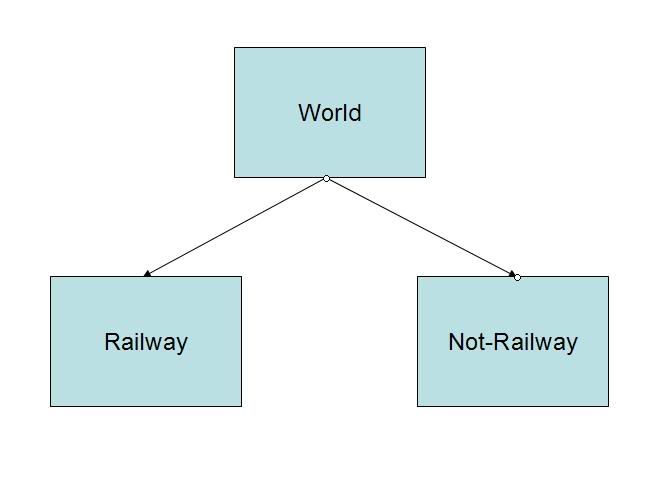

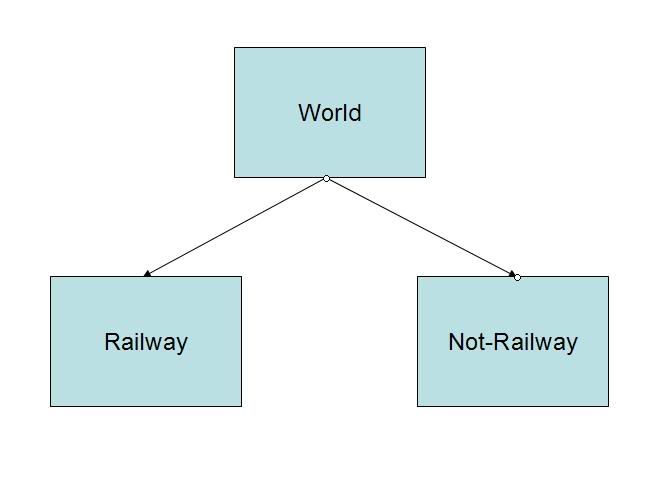

Best. Diagram. Ever.

I attended a talk at the IET last night about the East London Line project. Looking, as we are, for a flat in Wapping or Rotherhithe, the future of that line is rather important to me :-)

The talk was rather technical but was made worthwhile by the inclusion of the best diagram in the history of the world. The presenter was attempting to explain how he has modelled the project and reasoned about all the stakeholders and interested parties. The diagram below shows the first stage of his decomposition. I'm not saying railway folk see things in black and white but you could easily be forgiven for thinking you're either with them or against them...

Wednesday, April 05, 2006

Do you still believe anything your history teachers taught you?

Bobby Woolf discusses a show that has yet to hit this side of the pond.

It appears that the show is based around the idea of taking an episode from America's history and asserting that things were actually somewhat different. I can think of several areas of British history that might benefit from this approach.

Product Updates

Andy's done the deed for Broker and Community Edition of the App Server so I suppose I'd better do the same for my part of the world....

WebSphere Process Server 6.0.1 Fix Pack 1

Tuesday, April 04, 2006

The best description of Opportunity Cost I've read

http://divisionoflabour.com/archives/002456.php (although I did have to read it three times before I got the point...)

Websites which used to be good but are now pants...

DiamondGeezer asks a good question and points to a perfect example.

The world is hardly short of such examples...

Sometimes discussions about naming can be helpful

James Governor pointed me at this excellent article which attempts to untangle the concepts of "Data marts" and "Data warehouses". I found it very helpful indeed.

I used to have very little time for debates over what we name things but such debates, run carefully, can be very useful for ensuring the participants completely understand the concepts. Jeff's piece is a perfect example of this in action. I started thinking about this when I blogged that I didn't think there was a difference between "Orchestration" and "Choreography" a few weeks back. I still don't think there's a real difference but there's a fascinating comment in there by Steve Ross-Talbot which has helped sharpen up how I describe some concepts.
