Microsoft's wonderful Raymond Chen discussed the evils of polling a few weeks back. He is, of course, correct that it's usually a bad idea. My rule of thumb when considering such scenarios is that if something intuitively feels wrong, then it usually is. And polling feels *wrong*.

However, I think it's possible to be *too* disdainful of polling. In particular, I am increasingly of the opinion that, in judiciously chosen scenarios, it can help us build significantly more robust systems than would otherwise be possible.

My example comes from the world of Enterprise Application Integration. Assume we have a monolithic application that supports some business function.

For whatever reason, we need to know when various tasks have been performed ("new customer record created", "purchase order modified", etc.). When such an event occurs, we need to send the information to some other system. This is the bread and butter of integration. Call it EAI, BI, ESB, whatever.

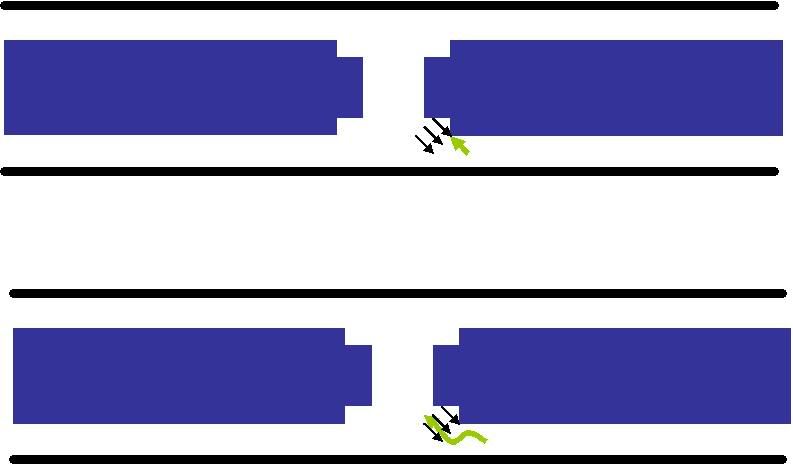

Now, how do we detect such events? There are two broad approaches. We can configure the source application to *notify* the integration layer when something of interest has happened (e.g. by sending an event into the bus, by calling a web service, by placing a message on a queue... whatever). Or we can configure the integration layer to somehow *detect* the event.

The latter is most often achieved by configuring the source application to record that an event has occurred (e.g. in one of its database tables) and having the integration layer periodically check this event store. In other words, we configure the integration layer to poll.
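To make the polling approach concrete, here is a minimal Python sketch using SQLite. The table name `event_log`, its columns, and the functions `record_event`, `poll_once` and `run_adapter` are hypothetical, chosen purely for illustration; a real source application would have its own schema. The source application only inserts rows, and the adapter periodically reads anything newer than the last event it has forwarded.

```python
import sqlite3
import time

# Hypothetical event table: the source application only ever INSERTs here
# as part of its normal work; it never calls the integration layer.
SCHEMA = """
CREATE TABLE IF NOT EXISTS event_log (
    id         INTEGER PRIMARY KEY AUTOINCREMENT,
    event_type TEXT NOT NULL,
    payload    TEXT NOT NULL
)
"""


def record_event(conn, event_type, payload):
    """Source-application side: note that something of interest happened."""
    with conn:  # commits the transaction on success, rolls back on error
        conn.execute(
            "INSERT INTO event_log (event_type, payload) VALUES (?, ?)",
            (event_type, payload),
        )


def poll_once(conn, last_seen_id, forward):
    """Integration-layer side: fetch events newer than last_seen_id,
    forward each one in order, and return the new high-water mark."""
    rows = conn.execute(
        "SELECT id, event_type, payload FROM event_log WHERE id > ? ORDER BY id",
        (last_seen_id,),
    ).fetchall()
    for event_id, event_type, payload in rows:
        forward(event_type, payload)  # e.g. publish onto the bus
        last_seen_id = event_id  # advance only after a successful forward
    return last_seen_id


def run_adapter(conn, forward, interval_seconds=30.0, last_seen_id=0):
    """Poll forever; interval_seconds trades latency against load."""
    while True:
        last_seen_id = poll_once(conn, last_seen_id, forward)
        time.sleep(interval_seconds)
```

In a real adapter the high-water mark would be persisted between runs so that a restart neither replays nor skips events. Using the id as the mark also assumes rows become visible in id order; where concurrent transactions can commit out of order, flagging rows as processed is a safer alternative.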

Who, in their right mind, would ever regard the latter as a reasonable approach?

Well... me, quite often. And here's why.

The former approach has plenty of advantages: real-time notification of an event, no polling, a clear event flow, etc. But it also has two potential drawbacks. The first is that the source application has to be configured to talk to the integration layer. In most cases this is no trouble at all, but in some situations (especially with older, bespoke software) it is quite difficult. The second, and worse, is that this approach adds a dependency to the source application. It now relies on the correct functioning of (part of) the integration layer, or must have sophisticated error handling added to deal with the times when the integration layer is unavailable. High-availability scenarios therefore become more complicated. Considerations such as these can make it much harder to get application owners to buy in to an integration project.
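For contrast, here is the kind of error handling the *notify* approach pushes into the source application. This is a minimal Python illustration, not a recommended design: `send` stands in for whatever delivery mechanism is used (web service call, queue, bus), and `Notifier` and `IntegrationUnavailable` are hypothetical names. Retries happen immediately, without backoff, for brevity.

```python
import collections


class IntegrationUnavailable(Exception):
    """Hypothetical error raised when the integration layer can't be reached."""


class Notifier:
    """Source-application side of the 'notify' approach (a sketch).

    `send` is a hypothetical callable that delivers one event to the
    integration layer. Because the application now depends on it,
    failures have to be handled here, inside the application.
    """

    def __init__(self, send, max_attempts=3):
        self.send = send
        self.max_attempts = max_attempts
        self.backlog = collections.deque()  # events not yet delivered, oldest first

    def notify(self, event):
        # Queue behind any older undelivered events to preserve ordering.
        self.backlog.append(event)
        return self.flush()

    def flush(self):
        """Try to deliver the backlog; return True if it is now empty."""
        while self.backlog:
            event = self.backlog[0]
            for _ in range(self.max_attempts):
                try:
                    self.send(event)
                    break  # delivered
                except IntegrationUnavailable:
                    continue  # immediate retry, no backoff
            else:
                # Every attempt failed: keep this event (and the rest)
                # and let the business operation carry on regardless.
                return False
            self.backlog.popleft()
        return True
```

Note that the backlog lives in memory, so events are lost if the application restarts while the integration layer is down. Making it durable means the application itself must store pending events somewhere, which is exactly the kind of added responsibility that makes application owners wary.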

The polling scenario has plenty of obvious disadvantages (polling can be costly, polling can introduce latency, etc.). But it has one very nice advantage: we have added no dependency to the source system. It can generate the events that the wider scenario requires without complicating its own operations. This is because the integration infrastructure (perhaps in the form of an adapter) makes a call *to* the application, rather than listening for a call *from* the application.

My view is that polling can often be considered a transitional state: get a project working and demonstrate value to the business sponsors and the application owners. Once the integration infrastructure has proved itself (and has been shown to be more reliable than the applications with which it integrates!), resistance to modifying the applications to call the integration layer often evaporates. Polling plays a valuable role in getting us to that point, and in solving problems where direct notification is unachievable.

So, no. Polling is *not* always evil. It is merely *usually* evil...